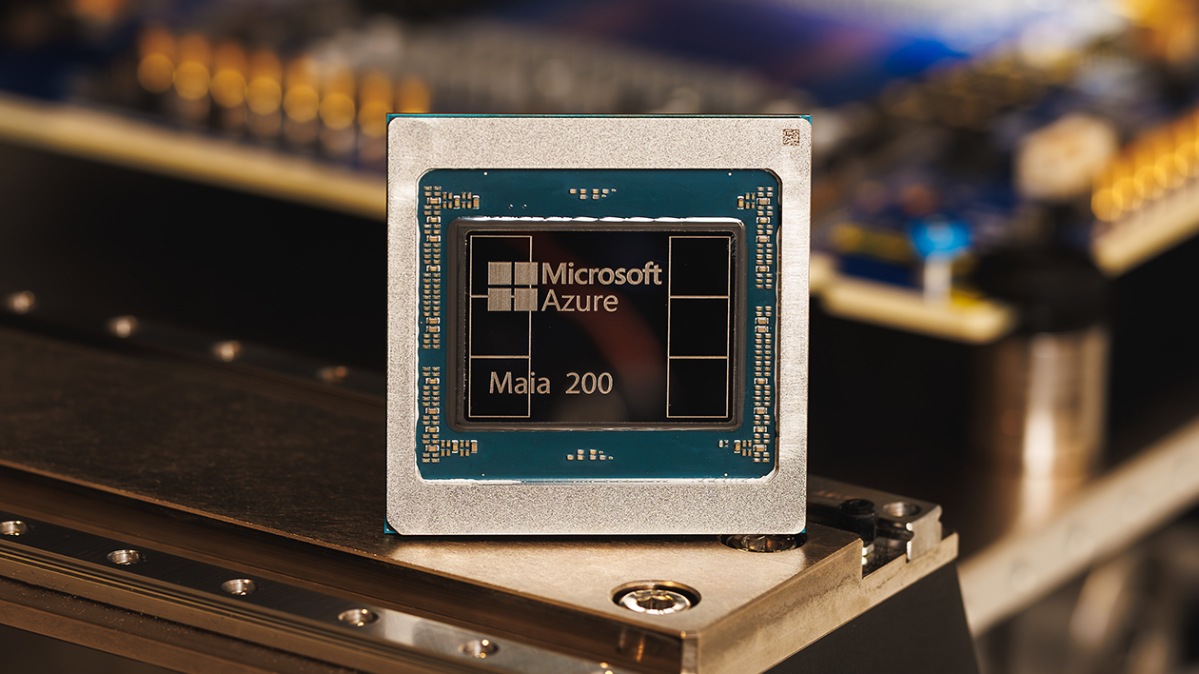

Microsoft has announced the launch of its latest chip, the Maia 200, which the company describes as a silicon workhorse designed to scale AI inference.

The Maia 200, which follows the company's Maia 100 released in 2023, is technically equipped to run powerful AI models at faster speeds and with greater efficiency, the company said. Maia has more than 100 billion transistors, delivering more than 10 petaflops of 4-bit precision and approximately 5 petaflops of 8-bit performance, an increase over its predecessor.

Inference refers to the computational process of running a model, as opposed to the computation required to train it. As AI companies mature, inference costs have become a significant part of overall operating costs, leading to renewed interest in optimizing the process.

Microsoft hopes that the Maia 200 can be part of that optimization, making its AI business run with fewer disruptions and lower power usage. "In practical terms, one Maia 200 node can effortlessly run the largest models today, with plenty of headroom for even larger models in the future," the company said.

Microsoft's new chip is also part of a growing trend of tech giants turning to self-designed chips as a way to reduce their dependence on NVIDIA, whose cutting-edge GPUs have become increasingly important to the success of AI companies. Google, for example, has its TPU (tensor processing unit), which is not sold as a chip but as computing power accessible through its cloud. Then there's Amazon's Trainium, the e-commerce giant's own AI accelerator chip, which just launched its latest version, Trainium3, in December. In each case, these chips can be used to offload some of the computations that would otherwise be assigned to NVIDIA GPUs, reducing overall hardware costs.

With Maia, Microsoft is positioning itself to compete with these alternatives. In Monday's press release, the company noted that Maia delivers 3x the FP4 performance of Amazon's third-generation Trainium chip, and FP8 performance above that of Google's seventh-generation TPU.

Microsoft says that Maia is already hard at work powering the AI models from the company's Superintelligence team. It has also been supporting the operation of Copilot, its chatbot. On Monday, the company said it has invited a range of parties, including developers, academics, and frontier AI labs, to use the Maia 200 software development kit in their workloads.
