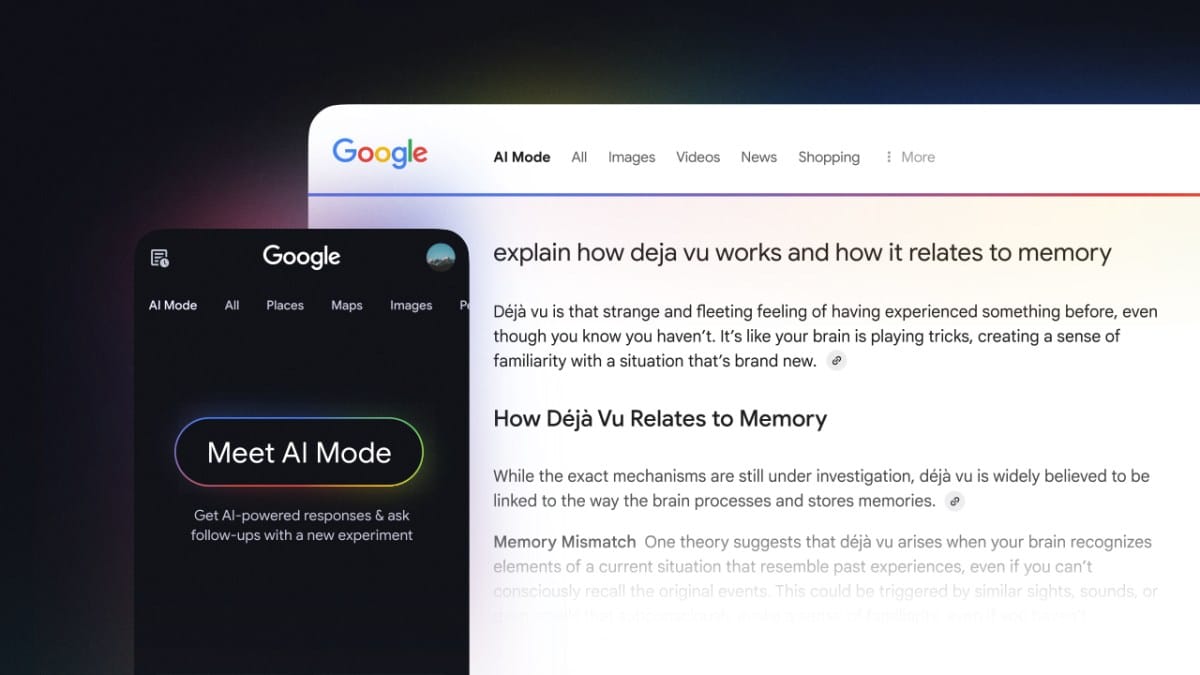

Google is launching an experimental “AI Mode” in Search that brings it closer to popular services like Perplexity AI and OpenAI’s ChatGPT Search. The tech giant announced on Wednesday that the new mode is designed to let users ask complex, multi-part questions and follow-ups to dig deeper into a topic directly within Google Search.

AI Mode is rolling out to Google One AI Premium subscribers starting this week and is accessible through Search Labs, Google’s experimental arm.

The feature uses a custom version of Gemini 2.0 and is especially useful for questions that call for further exploration and comparison, thanks to its advanced reasoning, thinking, and multimodal capabilities.

For example, you could ask: “What’s the difference in sleep tracking features between a smart ring, a smartwatch, and a tracking mat?”

AI Mode can then provide a detailed comparison of what each product offers, along with links to the articles it pulls the information from. You can then ask a follow-up question, such as “What happens to your heart rate during deep sleep?”, to continue your search.

Google says that in the past, it would have taken multiple queries to compare detailed options or explore a new concept through traditional Search.

With AI Mode, you can access web content and also tap into real-time sources such as the Knowledge Graph, information about the real world, and shopping data for billions of products.

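“What we’re seeing in testing is that people are asking questions about twice the length of traditional search queries, and they’re also following up within Search, and we think that creates more opportunities to do more with Google Search,” said Robby Stein, VP of Product at Google Search.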

Stein noted that since Google rolled out AI Overviews, a feature that displays a snapshot of AI-generated information at the top of the results page, it has heard that users want this type of AI-powered answer for more of their searches, which is why the company is introducing AI Mode.

AI Mode works using a “query fan-out” technique that issues multiple related searches across subtopics and data sources, then brings those results together into a single, easy-to-understand response.

“The model has been trained to prioritize and back up what it says with verifiable information, which is very important, and to pay extra attention to sensitive areas,” Stein said. “So this might be health, as an example, and in some cases the best response may simply be to surface the most relevant links. This is a new, cutting-edge AI technology.”

Because this is an early experiment, Google notes that it will continue to refine the user experience and expand functionality. For example, the company plans to make the experience more visual and to surface information from a wider range of sources, such as user-generated content. Google is also teaching the model to determine when to add hyperlinks in a response (e.g., for ticket booking) or when to prioritize images or video.

Google One AI Premium subscribers can access AI Mode by entering a question in the Search bar and tapping the “AI Mode” tab. Alternatively, they can go directly to Google.com/aimode. On mobile, they can open the Google app and tap the “AI Mode” icon below the search bar on the home screen.

As part of the announcement, Google also said that Gemini 2.0 is rolling out for AI Overviews in the U.S., where it will help answer harder questions, starting with coding and multimodal queries. In addition, Google announced that users no longer need to sign in to access AI Overviews, and that the feature is now rolling out to teens as well.