The U.S. men’s and women’s Olympic sprint teams have won more gold medals than any other country in history, but the men’s 4×100-meter relay team has suffered a string of disastrous defeats over the past twenty years. Why? It comes down to the crunch moment when a runner has to instinctively reach back and trust a teammate to place the baton perfectly in their hand.

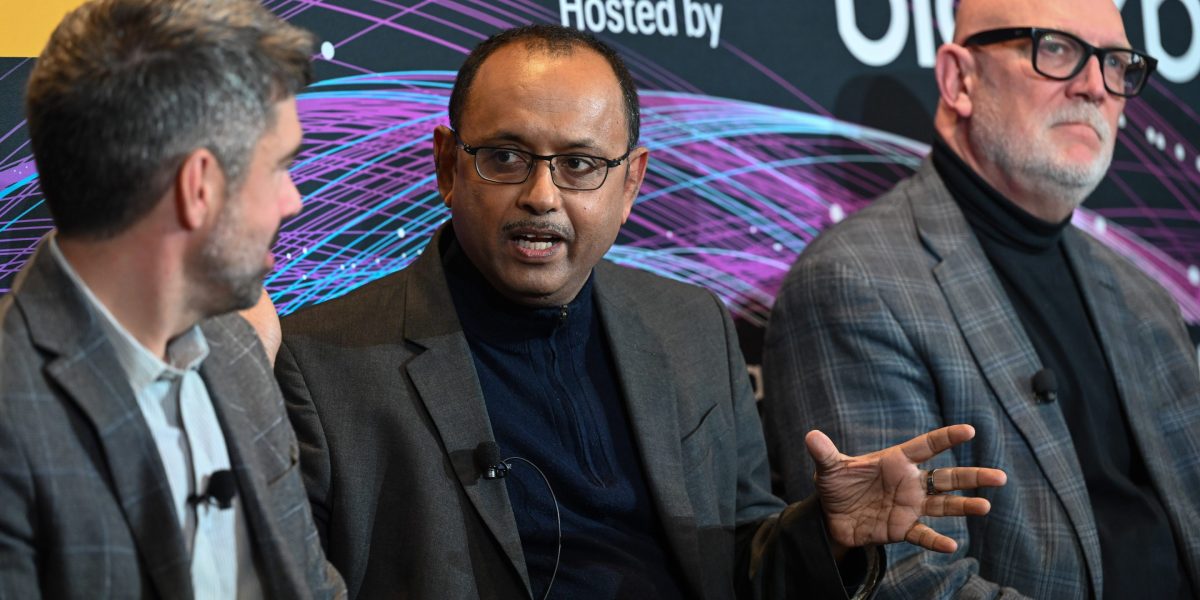

Sudip Datta, chief product officer at AI software company Blackbaud, said the image accurately captures what’s happening in artificial intelligence today. Companies are moving rapidly to build the fastest, most powerful systems possible, but there is a severe lack of trust between the technology and the people who use it, causing any new innovations or efficiencies to completely fail at the handoff.

“How many times has the U.S. had the fastest athlete but ended up losing the 4×100 relay?” Datta asked the audience at a Fortune Brainstorm AI roundtable this week in San Francisco. “Because trust doesn’t exist, the runner blindly takes it away from the person passing the baton.”

Datta said that reflexive reach back, made on trust, could by itself separate the winners from the losers in AI adoption. A significant challenge is that many companies today view building trust as a compliance burden that slows everything down. He told the Brainstorm AI audience that the opposite is true.

“Trust is actually a driver of revenue,” Datta said. “It’s an enabler because it drives further innovation because the more customers trust us, the faster we can accelerate the innovation journey.”

Scott Howe, president and CEO of data collaboration network LiveRamp, outlined five conditions that need to be met to build trust. He said regulators have done a reasonable job on the first two, but “we still have a long way to go” on the remaining three. The five conditions are: transparency about how data will be used; control over your data; an exchange of value for personal data; data portability; and finally, interoperability. Regulations including the European Union’s General Data Protection Regulation (GDPR) have made some modest progress, but Howe said most people “are not getting anywhere near fair value from the data we contribute.”

“Instead, the really big companies, some of them on the stage today, have shaved off value and made a lot of money,” Howe said. “The last two, we’re not making progress either as an industry or as a business.”

Owning your data

In Howe’s vision for the future, data is treated as a property right and people are entitled to fair compensation for its use.

“LLMs don’t own my data,” Howe said, referring to large language models. “I should own my data, so I should be able to move it from Amazon to Google and from Google to Walmart. It should travel with me if I let it.”

However, Howe said major tech companies are actively resisting portability and interoperability, creating data silos that trap customers in the current ecosystem.

Beyond personal data and potential consumer rights issues, trust challenges take different forms at different companies, and each company must decide what its own AI systems can safely access and what tasks they can complete autonomously.

Spencer Beemiller, innovation officer at software company ServiceNow, said the company’s customers are trying to determine which AI systems can operate without human oversight, a question that remains largely unanswered. ServiceNow can help organizations track their AI agents the same way they have monitored infrastructure, he said, by tracking what the systems are doing, what they have access to, and their lifecycle.

“We’re trying to help our clients identify which points are truly important in creating autonomous decision-making,” Beemiller said.

Problems like hallucinations, where AI systems confidently answer questions with fictitious or inaccurate information, require significant risk mitigation processes, he said. ServiceNow addresses this problem with what Beemiller calls an “orchestration layer,” which directs queries to specialized models. Small language models handle enterprise-specific tasks that require more precision, while larger models handle more conversational, natural-language tasks, he said.

“So it’s kind of like, ‘Yes, and’ certain agent components are going to talk to specific models that are only trained on internal data,” he said. “Others called from the orchestration layer will be abstracted into a larger model to be able to answer questions.”
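The routing idea Beemiller describes can be illustrated with a minimal sketch. This is not ServiceNow’s implementation: the two model functions, the keyword list, and the `route` function are all hypothetical stand-ins, and a real orchestration layer would likely use a far more sophisticated classifier than keyword matching.

```python
# Hypothetical stand-ins for model backends; a real system would call actual models.
def small_internal_model(query: str) -> str:
    """Pretend small model trained only on internal, enterprise-specific data."""
    return f"[internal-small] {query}"


def large_general_model(query: str) -> str:
    """Pretend larger model for open-ended, conversational questions."""
    return f"[general-large] {query}"


# Assumed keyword list marking a query as enterprise-specific (illustrative only).
ENTERPRISE_KEYWORDS = {"invoice", "ticket", "payroll", "incident"}


def route(query: str) -> str:
    """Send enterprise-specific queries to the small model, all others to the large one."""
    words = {w.strip(".,?!").lower() for w in query.split()}
    if words & ENTERPRISE_KEYWORDS:
        return small_internal_model(query)
    return large_general_model(query)


print(route("Close incident 42"))
print(route("How are you today?"))
```

In this sketch the first query matches a keyword and goes to the internal model, while the second falls through to the general one.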

Still, many fundamental questions remain unresolved, including those around cybersecurity, critical infrastructure, and the potentially catastrophic consequences of AI errors. As in other areas of tech, there’s an inherent tension between moving fast and getting it right.

“If we can earn trust, speed will follow,” Datta said. “It’s not just about running fast, it’s about maintaining trust along the way.”
